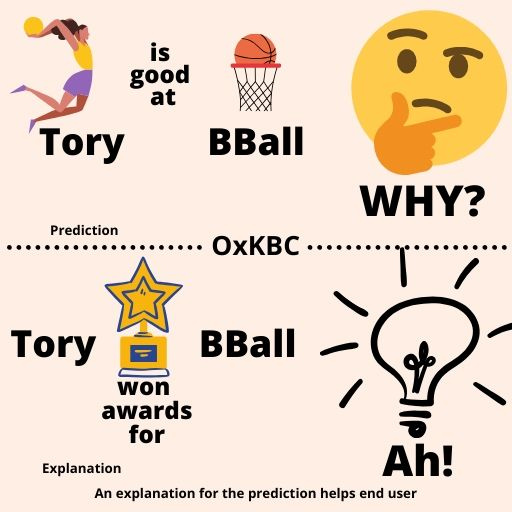

OxKBC: Outcome Explanation for Factorization Based Knowledge Base Completion

Yatin Nandwani, Ankesh Gupta, Aman Agrawal, Mayank Singh Chauhan, Parag Singla, Mausam

Keywords: xai, kbc, templates, outcome explanation

TLDR: We present an outcome explanation engine for factorization-based KBC.

Abstract:

State-of-the-art models for Knowledge Base Completion (KBC) are based on tensor factorization (TF), e.g., DistMult and ComplEx. While they produce good results, they cannot expose any rationale behind their predictions, potentially reducing a user's trust in the model. Previous works have explored inherently explainable models, e.g., Neural Theorem Proving (NTP), DeepPath, and MINERVA, but their explainability comes at the cost of performance. Others have tried to build an auxiliary explainable model with high fidelity to the underlying TF model, but unfortunately such models do not scale to large KBs such as FB15k and YAGO. In this work, we propose OxKBC, an Outcome eXplanation engine for KBC, which provides a post-hoc explanation for every triple inferred by an (uninterpretable) factorization-based model. It first augments the underlying knowledge graph by introducing weighted edges between entities based on their similarity as given by the underlying model. In the augmented graph, it defines a notion of human-understandable explanation paths, along with a language to generate them. Depending on the edges they traverse, the paths are aggregated into second-order templates for further selection. The best template and its grounding are then selected by a neural selection module that is trained with minimal supervision using a novel loss function. Experiments on Mechanical Turk demonstrate that users find our explanations more trustworthy than those produced by rule mining.
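To make the pipeline above more concrete, here is a minimal sketch under several stated assumptions. It is not the OxKBC implementation. The scoring function is the standard DistMult trilinear product. The similarity measure (cosine similarity of entity embeddings), the cutoff `threshold`, the single "similar head entity" template, and all names (`ENTITY_EMB`, `RELATION_EMB`, `KB`, `augment_with_similarity`, `explain_similar_head`) are hypothetical illustrations. The toy embeddings are invented, not learned. A plain argmax over similarity weights stands in for the paper's trained neural selection module.

```python
import math
from itertools import combinations

# Hypothetical toy embeddings for illustration only (not learned, not from the paper).
ENTITY_EMB = {
    "paris": [0.9, 0.1, 0.0],
    "lyon": [0.8, 0.2, 0.0],
    "france": [0.1, 0.9, 0.1],
    "berlin": [0.0, 0.1, 0.9],
}
RELATION_EMB = {"located_in": [1.0, 1.0, 0.5]}

# Known facts of the toy KB.
KB = {("lyon", "located_in", "france")}


def distmult_score(s, r, o):
    """DistMult trilinear score: sum_i e_s[i] * w_r[i] * e_o[i]."""
    return sum(a * b * c for a, b, c in zip(ENTITY_EMB[s], RELATION_EMB[r], ENTITY_EMB[o]))


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def augment_with_similarity(entities, threshold):
    """Return weighted, symmetric 'similar_to' edges between entity pairs.

    Assumption: similarity is the cosine of the model's entity embeddings,
    and an edge is kept only when it reaches `threshold`.
    """
    edges = {}
    for a, b in combinations(entities, 2):
        sim = cosine(ENTITY_EMB[a], ENTITY_EMB[b])
        if sim >= threshold:
            edges[(a, b)] = sim
            edges[(b, a)] = sim
    return edges


def explain_similar_head(kb, sim_edges, s, r, o):
    """Hypothetical template: (s, r, o) holds because (s2, r, o) is a known
    fact and s2 is similar to s. Returns the grounding (s2, weight) with the
    highest similarity weight, or None if no grounding exists. The argmax is
    a stand-in for a trained selection module."""
    groundings = [
        (s2, sim_edges[(s, s2)])
        for (s2, r2, o2) in kb
        if r2 == r and o2 == o and (s, s2) in sim_edges
    ]
    return max(groundings, key=lambda g: g[1], default=None)


if __name__ == "__main__":
    sim_edges = augment_with_similarity(ENTITY_EMB, threshold=0.9)
    print("score(paris, located_in, france):", distmult_score("paris", "located_in", "france"))
    print("explanation:", explain_similar_head(KB, sim_edges, "paris", "located_in", "france"))
```

Here the predicted triple (paris, located_in, france) would be explained by the known fact (lyon, located_in, france) together with the added similarity edge between paris and lyon. Thresholding keeps the augmented graph sparse. A real system would also need several templates and a way to compare explanations across them, which this sketch does not attempt.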