XREF: Entity Linking for Chinese News Comments with Supplementary Article Reference

Xinyu Hua, Lei Li, Lifeng Hua, Lu Wang

Keywords: Entity Linking, Chinese social media, Data Augmentation

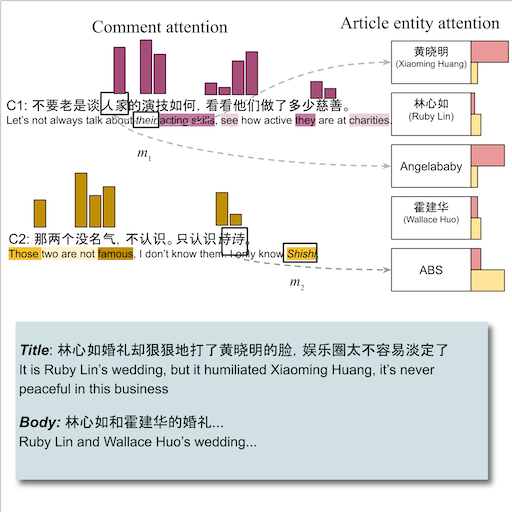

TLDR: We study entity linking for Chinese news comments and propose a novel attention-based method to detect relevant context and supporting entities from reference articles.

Abstract:

Automatic identification of entities mentioned in social media posts facilitates quick digestion of trending topics and popular opinions. Nonetheless, this remains a challenging task due to limited context and diverse name variations. In this paper, we study the problem of entity linking for Chinese news comments given mention spans. We hypothesize that comments often refer to entities in the corresponding news article, as well as topics involving those entities. We therefore propose a novel model, XREF, that leverages attention mechanisms to (1) pinpoint relevant context within comments, and (2) detect supporting entities from the news article. To improve training, we make two contributions: (a) we propose a supervised attention loss in addition to the standard cross-entropy loss, and (b) we develop a weakly supervised training scheme to utilize a large-scale unlabeled corpus. Two new datasets in the entertainment and product domains are collected and annotated for experiments. Our proposed method outperforms previous methods on both datasets.
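
As a rough illustration of contribution (a), the sketch below combines a cross-entropy term over candidate entities with an auxiliary term that rewards attention mass on tokens marked as relevant. The abstract does not give the exact formulation, so everything here is an assumption made for illustration: the function names, the negative-log-mass form of the attention term, and the weighting factor `lam` are hypothetical and may differ from what XREF actually uses.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(candidate_scores, gold_index):
    """Standard cross-entropy over candidate entity scores."""
    probs = softmax(candidate_scores)
    return -math.log(probs[gold_index])

def attention_loss(attention_scores, relevant_mask):
    """Assumed form of a supervised attention loss: the negative log of
    the attention mass placed on tokens annotated as relevant."""
    weights = softmax(attention_scores)
    mass = sum(w for w, r in zip(weights, relevant_mask) if r)
    return -math.log(mass + 1e-12)  # epsilon guards against log(0)

def combined_loss(candidate_scores, gold_index,
                  attention_scores, relevant_mask, lam=0.5):
    """Cross-entropy plus a weighted attention term (lam is hypothetical)."""
    return (cross_entropy(candidate_scores, gold_index)
            + lam * attention_loss(attention_scores, relevant_mask))

# Toy example: three candidate entities, four context tokens.
loss = combined_loss(
    candidate_scores=[2.0, 0.5, -1.0], gold_index=0,
    attention_scores=[0.1, 3.0, 0.2, 0.0], relevant_mask=[0, 1, 0, 0],
)
```

Under this assumed formulation, the auxiliary term shrinks as attention concentrates on the annotated tokens, so it acts as a direct training signal for the attention weights alongside the linking objective.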